Case Studies: Successful Implementations of AWS in Various Industries

Introduction

Amazon Web Services (AWS) has transformed the way businesses manage and scale their IT infrastructure. From startups to large enterprises, organizations across industries use AWS to drive innovation, streamline operations, and reduce costs. In this blog, we'll explore several case studies that show how AWS has been implemented across different sectors, and what those organizations gained in efficiency and growth. Whether you're in media, healthcare, finance, or manufacturing, these examples offer practical insights into how AWS can be integrated into your organization.

AWS in Streaming Entertainment: Netflix's Global Streaming Platform

Background

Netflix, a leader in online streaming, faced significant challenges in scaling its infrastructure to meet the demands of millions of users worldwide. As the company grew, so did the complexity of managing its on-premises data centers. To gain scalability and agility, Netflix turned to AWS for cloud infrastructure that could handle global demand for its streaming service.

The AWS Solution

Netflix's decision to migrate to AWS was driven by the need for a more flexible and scalable infrastructure. The company moved its application stack to AWS, including the services behind streaming playback and customer management. The video files themselves are delivered to viewers through Netflix's own content delivery network, Open Connect. Key AWS services used include:

- Amazon EC2 for compute power
- Amazon S3 for storing large volumes of video content
- AWS Lambda for serverless computing

Results

- Scalability: AWS enabled Netflix to handle surges in demand smoothly, especially during high-traffic events such as new show releases.
- Cost Efficiency: Moving to the cloud reduced Netflix's dependency on physical data centers, resulting in significant cost savings.
- Global Reach: AWS helped Netflix expand its streaming service to more than 190 countries with minimal latency.

AWS in Healthcare: Philips Healthcare's Cloud-Based Solutions

Background

Philips Healthcare, a global leader in health technology, faced challenges in managing and analyzing massive amounts of medical data. It needed a secure, scalable cloud infrastructure to support innovation in telemedicine, diagnostics, and patient care, and chose AWS to build its cloud-based healthcare solutions.

The AWS Solution

Philips Healthcare uses a variety of AWS services to power its digital health solutions, including:

- Amazon EC2 for computing power
- Amazon S3 for scalable storage
- AWS IoT to collect data from connected medical devices
- Amazon Redshift for data analytics

Philips also used Amazon SageMaker to develop machine learning models that help improve diagnostics and predict patient outcomes.

Results

- Improved Data Security: By using AWS's HIPAA-eligible services, Philips could store and process patient data securely.
- Enhanced Analytics: With AWS, Philips can analyze patient data in real time, leading to faster and more accurate diagnostics.
- Faster Innovation: The scalability of AWS enabled Philips to deploy new healthcare applications and services quickly.

AWS in Finance: Capital One's Digital Transformation

Background

Capital One, a major player in the banking industry, has been undergoing a digital transformation to improve customer experience and streamline its internal operations. As part of this transformation, it sought a cloud provider that could deliver security, scalability, and agility, and turned to AWS to migrate its infrastructure to the cloud.
The AWS Solution

Capital One migrated several key components of its infrastructure to AWS, including:

- Amazon EC2 and Amazon RDS for running applications and databases
- Amazon S3 for secure, scalable data storage
- Amazon CloudWatch for monitoring applications and infrastructure
- AWS Lambda for serverless computing and automation

By using AWS, Capital One moved away from traditional on-premises data centers to a fully cloud-based infrastructure that can scale to meet the demands of its millions of customers.

Results

- Faster Product Development: The agility of AWS allowed Capital One to build and roll out new products more quickly.
- Increased Security: Capital One benefited from AWS security features such as encryption, and from AWS's compliance with industry standards like PCI DSS.
- Cost Reduction: Moving to the cloud eliminated the need for costly data centers and allowed for a pay-as-you-go pricing model.

AWS in Manufacturing: Siemens' Industrial IoT Platform

Background

Siemens, a global leader in manufacturing and automation, wanted to build an Industrial Internet of Things (IIoT) platform that would let businesses monitor and optimize their manufacturing operations. The company needed a scalable, secure cloud infrastructure to support the platform and handle vast amounts of data from industrial equipment.

The AWS Solution

Siemens turned to AWS to develop its MindSphere IIoT platform, which connects machines, sensors, and other industrial equipment to the cloud. The solution uses several AWS services:

- Amazon S3 for storing large amounts of sensor data
- Amazon EC2 for computing and processing data
- Amazon Kinesis for real-time data streaming
- AWS IoT Core for managing IoT devices
- AWS Lambda for serverless computing

By building on AWS, Siemens was able to provide real-time insights into manufacturing processes, helping businesses optimize operations and reduce downtime.
Results

- Operational Efficiency: The MindSphere platform gave businesses real-time data and insights, leading to improved manufacturing efficiency and lower costs.
- Scalability: Siemens was able to scale its IIoT platform to handle data from millions of devices across multiple industries.
- Predictive Maintenance: Using AWS services like Amazon SageMaker, Siemens developed machine learning models that help predict equipment failures before they occur, reducing downtime.

AWS in Media and Entertainment: The New York Times' Content Delivery

Background

The New York Times (NYT) needed a cloud infrastructure that could handle massive amounts of content delivery while ensuring fast, reliable, and secure access for users. The company turned to AWS to modernize its infrastructure and provide a better digital experience for readers around the world.

The AWS Solution

NYT uses several AWS services to support its content delivery:

- Amazon S3 for storing articles, videos, and images
- Amazon CloudFront for fast content delivery to users globally
- AWS Lambda for running code in response to events
- Amazon RDS for managing database workloads

Additionally, NYT uses AWS Media Services to stream videos and deliver rich multimedia content to readers.

Results

- Improved Performance: AWS helped NYT reduce content load

Author: Admin

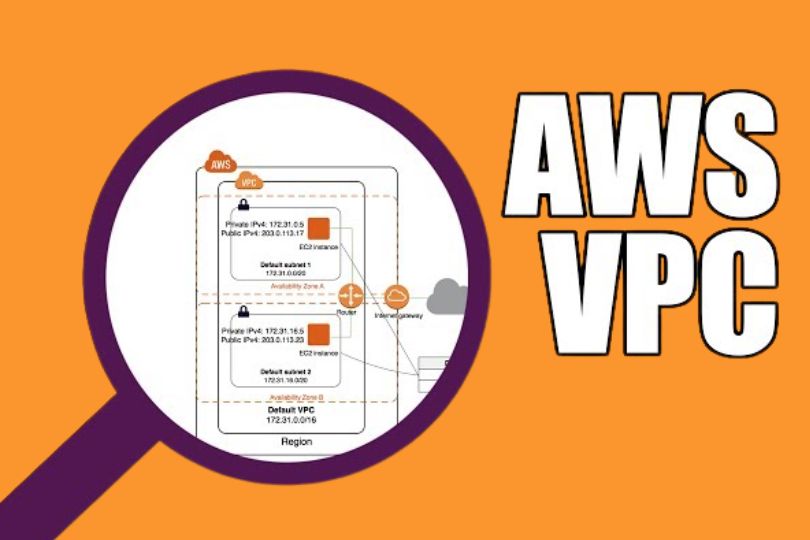

Building a Secure Virtual Private Cloud (VPC) in AWS: A Step-by-Step Guide

Introduction

As businesses increasingly move to the cloud, security remains a top priority. One of the most effective ways to secure your AWS infrastructure is to set up a Virtual Private Cloud (VPC). A VPC provides a private, isolated network within AWS. It gives you control over how your cloud resources communicate and helps safeguard your applications. In this blog, we will walk through building a secure VPC in AWS, including best practices, common pitfalls to avoid, and tips for robust security.

What is a Virtual Private Cloud (VPC)?

A Virtual Private Cloud (VPC) is a virtual network that you define in AWS. It resembles a traditional on-premises network, but it exists in the cloud. Within a VPC, you can create subnets, configure route tables, manage IP addressing, and set up firewalls. A secure VPC helps ensure that only authorized users and systems can reach your cloud resources, and it protects sensitive data from external threats.

Key Components of a Secure VPC

Before diving into the setup process, let's review the critical components that make up a VPC:

- Subnets: Logical divisions of your VPC's IP address range where you deploy AWS resources (like EC2 instances). Subnets can be public (with a route to the internet) or private (with no direct route to the internet).
- Internet Gateway (IGW): A gateway that allows communication between instances in your VPC and the internet. A secure VPC typically routes only its public subnets through the internet gateway and keeps private subnets from direct internet access.
- Route Tables: Sets of rules that determine where network traffic from each subnet is directed, for example to other subnets, an internet gateway, or a NAT gateway. Properly configured route tables are crucial for security and for ensuring that traffic follows the intended path.
- Security Groups: Virtual firewalls that control inbound and outbound traffic to your instances.
  Security groups are stateful, meaning that if you allow inbound traffic, the response is automatically allowed.
- Network Access Control Lists (NACLs): Stateless firewalls that provide an additional layer of security at the subnet level.
- VPC Peering: Allows two VPCs to communicate with each other privately. This is useful for cross-region or cross-account communication.
- VPN and Direct Connect: These services let you securely connect your on-premises network to your AWS VPC over a Virtual Private Network (VPN) or a dedicated network link.

Step-by-Step Guide to Building a Secure VPC in AWS

Now that you understand the key components, let's walk through the steps to set up a secure VPC.

Step 1: Define Your Network Architecture

Before you create your VPC, plan your network architecture. Consider the following:

- IP Address Range: Choose an appropriate IP range for your VPC (e.g., 10.0.0.0/16). Make sure the range is large enough for all the resources you plan to deploy. It should also not overlap with networks you may later connect to through peering or VPN.
- Subnets: Break your VPC's IP range into smaller subnets. Typically, you'll create multiple subnets for different purposes:
  - Public Subnets: For resources that need direct internet access (e.g., load balancers, bastion hosts).
  - Private Subnets: For resources that should not have direct internet access (e.g., databases, application servers).
- Availability Zones (AZs): A VPC spans all the AZs in its region, but each subnet lives in exactly one AZ. Deploy your subnets across at least two AZs for redundancy and fault tolerance.

Step 2: Create the VPC

- Log in to the AWS Console: Go to the VPC Dashboard under the Networking & Content Delivery section.
- Create a New VPC: Select Create VPC and enter the IPv4 CIDR block (e.g., 10.0.0.0/16). Decide whether you also need an IPv6 CIDR block.
- Set Up Subnets: Create subnets in each AZ you plan to use. For example, create public subnets for load balancers and private subnets for databases.
  You'll need to assign each subnet a CIDR block from within the VPC's range (e.g., 10.0.1.0/24 for the first subnet, 10.0.2.0/24 for the second).
- Configure Route Tables: Set up a route table for public subnets that includes a default route (0.0.0.0/0) to the Internet Gateway. You can add this route once the gateway from Step 3 exists. For private subnets, configure a route table that sends outbound internet traffic to a NAT Gateway, which must itself sit in a public subnet. Alternatively, use VPC Peering routes for communication with other VPCs.

Step 3: Attach an Internet Gateway

- Create an Internet Gateway (IGW): From the VPC Dashboard, go to Internet Gateways and select Create internet gateway.
- Attach the IGW to Your VPC: Once the IGW is created, attach it to your VPC so that instances in your public subnets can communicate with the internet. Those instances also need a public or Elastic IP address.

Step 4: Set Up Security Groups and NACLs

- Security Groups: Security groups act as virtual firewalls for your instances. Create security groups with appropriate rules for each type of resource. For public-facing resources (e.g., a web server), allow HTTP/HTTPS traffic from any IP. For private resources (e.g., a database), restrict access to specific security groups or IP addresses.
- Network Access Control Lists (NACLs): Security groups are stateful, but NACLs are stateless, so return traffic must be allowed explicitly. Apply NACLs at the subnet level to add an extra layer of security. For example, a private subnet's NACL can deny inbound connections from the internet while the subnet's outbound traffic still reaches the internet through a NAT Gateway. For that to work, the NACL must also allow inbound return traffic on the ephemeral port range (1024-65535); otherwise the responses are dropped.

Step 5: Set Up a Bastion Host (Optional)

A bastion host is a hardened server that you use to connect to instances in your private subnets. It acts as a jump server, allowing SSH or RDP access to private instances without exposing them directly to the internet.

- Launch an EC2 instance in the public subnet.
- Configure the bastion host's security group to allow inbound SSH only from your IP address.
- Configure the private instances' security groups to allow inbound SSH from the bastion host's security group.
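You can check the addressing plan from Steps 1 and 2 before you touch the console. Below is a minimal Python sketch that uses only the standard-library `ipaddress` module. It carves a /16 VPC range into one public and one private /24 per Availability Zone and confirms that the blocks don't overlap. It also reports usable addresses, since AWS reserves five addresses in every subnet. The AZ names and the public/private naming scheme are illustrative assumptions. Blocks are allocated from the bottom of the range, starting at 10.0.0.0/24 rather than the 10.0.1.0/24 used in the example above.

```python
import ipaddress
from itertools import combinations


def plan_subnets(vpc_cidr, azs, new_prefix=24):
    """Allocate one public and one private subnet per Availability Zone.

    Blocks are handed out in order from the bottom of the VPC range.
    """
    vpc = ipaddress.ip_network(vpc_cidr)
    needed = 2 * len(azs)
    available = 2 ** (new_prefix - vpc.prefixlen)
    if needed > available:
        raise ValueError(f"{vpc_cidr} only fits {available} /{new_prefix} subnets, need {needed}")

    blocks = vpc.subnets(new_prefix=new_prefix)  # consecutive, equal-size blocks
    plan = {}
    for tier in ("public", "private"):
        for az in azs:
            plan[f"{tier}-{az}"] = next(blocks)
    return plan


def usable_addresses(subnet):
    # AWS reserves the first four addresses and the last address of every subnet.
    return subnet.num_addresses - 5


def assert_no_overlap(plan):
    for (name_a, a), (name_b, b) in combinations(plan.items(), 2):
        if a.overlaps(b):
            raise ValueError(f"{name_a} ({a}) overlaps {name_b} ({b})")


if __name__ == "__main__":
    # Hypothetical example: two AZs in us-east-1.
    plan = plan_subnets("10.0.0.0/16", ["us-east-1a", "us-east-1b"])
    assert_no_overlap(plan)
    for name, subnet in plan.items():
        print(f"{name:20} {subnet}  ({usable_addresses(subnet)} usable)")
```

With the sample input, the script prints four non-overlapping /24 blocks with 251 usable addresses each, ready to enter when you create subnets.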
Step 6: Enable Monitoring and Logging

Once your VPC is set up, monitor and log traffic to maintain security and compliance:

- Amazon CloudWatch: Use CloudWatch to monitor resource utilization and performance within your VPC. Configure alarms for high CPU usage, unusual traffic patterns, or low memory. Memory metrics require the CloudWatch agent on your instances.
- VPC Flow Logs: Enable VPC Flow Logs to capture information about IP traffic to and from network interfaces in your VPC. This

How to Migrate Your On-Premises Applications to AWS: A Step-by-Step Guide

Introduction

Cloud computing has become an essential part of modern business strategy. AWS (Amazon Web Services) is one of the most popular cloud platforms, offering a vast array of services to support businesses in their cloud journey. Migrating on-premises applications to AWS lets organizations benefit from scalability, cost efficiency, and improved performance. However, a successful migration requires careful planning and execution. In this blog, we will guide you through the process of migrating your on-premises applications to AWS, including common challenges, best practices, and tools to make the transition smooth and efficient.

Why Migrate to AWS?

Migrating your on-premises applications to AWS offers several benefits:

- Scalability: AWS lets you scale resources up or down based on demand, so your applications keep performing well as load changes.
- Cost Savings: With AWS, you pay only for what you use. This can cost less than buying and maintaining on-premises hardware sized for peak load.
- Flexibility: AWS offers a wide range of services, such as compute, storage, and networking, to support different types of workloads.
- Security: AWS provides robust security features, including data encryption, identity management, and compliance certifications, to help protect your applications and data.

By migrating to AWS, you can make your infrastructure easier to adapt to future needs and improve the overall efficiency and agility of your organization.

How to Plan a Successful Migration to AWS

Migrating applications to AWS requires careful planning. Here's a breakdown of the steps involved:

1. Assess Your Current Infrastructure

Before starting the migration, assess your existing on-premises environment. Understand the architecture, applications, and workloads that need to be migrated.
This includes:

- Inventory of Applications: Create a list of all applications running on your on-premises servers. Identify the critical applications and services that need to be migrated first.
- Resource Requirements: Determine the compute, storage, and networking resources your applications currently use. This will help you map them to the appropriate AWS services.
- Performance Metrics: Collect performance data such as CPU, memory, and storage usage so you can choose AWS resources that meet your applications' needs.
- Dependencies: Identify interdependencies between applications and databases. Understanding these relationships helps you plan the migration without causing disruptions.

2. Define Your Migration Strategy

There are different strategies for migrating to the cloud, and your choice depends on the complexity of the migration and your business needs. AWS describes these options as the "6 Rs" of cloud migration; its current guidance adds a seventh, Relocate:

- Rehost (Lift and Shift): Move your applications to AWS as-is, without significant changes. This is usually the quickest migration method and suits applications that need minimal adjustment.
- Replatform: Make a few optimizations to take advantage of AWS services (e.g., moving a self-managed database to Amazon RDS) without changing the core architecture. This is often called "lift, tinker, and shift."
- Repurchase: Replace legacy applications with cloud-native products or services, such as Software as a Service (SaaS).
- Refactor/Re-architect: Redesign applications to take full advantage of AWS services. This might involve breaking a monolithic application into microservices.
- Retire: Decommission outdated applications that are no longer needed or no longer deliver value to the business.
- Retain: Keep some applications on-premises while migrating others to the cloud.

Choosing the right strategy for each application helps optimize your migration for both cost and performance.

3. Choose the Right AWS Services

AWS offers a wide range of services to support your migration. Key services to consider include:

- Amazon EC2: Virtual servers for running your applications.
- Amazon RDS: Managed relational databases for SQL workloads.
- Amazon S3: Scalable object storage for backups and static data.
- AWS Lambda: Serverless computing for event-driven architectures.
- Amazon VPC: Virtual private cloud for network isolation and security.

Evaluate your application requirements and match them to the appropriate AWS services to ensure efficient resource allocation.

4. Migrate Data and Applications

Data migration is a critical part of any cloud migration. Depending on your data volume and how much downtime you can tolerate, you can choose from several AWS tools:

- AWS Migration Hub: A central place to track the progress of your migration across AWS services. It doesn't move data itself, but it gives you a consolidated view of the migration and helps you spot bottlenecks.
- AWS Database Migration Service (DMS): Helps you migrate databases to AWS with minimal downtime. It supports most major database engines, including MySQL, PostgreSQL, and Oracle.
- AWS Snowball: For large-scale data migrations, Snowball is a physical device that securely transfers data to AWS. It suits transfers of terabytes or petabytes of data when moving them over the network would be too slow.
- AWS DataSync: A managed service that simplifies and accelerates data transfer between on-premises storage and AWS.

5. Test and Validate Your Migration

Once your data and applications are moved to AWS, test and validate the migration to confirm that everything works as expected before going live:

- Functionality Testing: Ensure that all applications and services function correctly in the AWS environment.
- Performance Testing: Compare application performance before and after migration to ensure it meets the required standards.
- Security and Compliance: Check that security configurations, such as encryption and access control, are properly implemented and compliant with industry standards.

Post-Migration Best Practices

Once your applications are migrated to AWS, a few post-migration activities will help optimize your environment:

1. Optimize for Cost and Performance

AWS uses a pay-as-you-go model, but you still need to optimize your resources to avoid unnecessary costs. Some tips:

- Right-sizing: Ensure that instance types and sizes match your application's requirements to avoid over-provisioning.
- Auto Scaling: Use Auto Scaling to adjust capacity automatically based on demand. It adds instances during traffic spikes and removes them during periods of low usage.
- AWS Trusted Advisor: Use AWS Trusted Advisor to identify cost optimization opportunities and other improvements in your AWS environment.

2. Implement Monitoring and Logging

Use AWS monitoring and logging tools to ensure the health and performance of your migrated

Maximizing Performance with AWS Elastic Load Balancing: A Guide for Seamless Scalability

Introduction

In today's world of cloud computing, keeping your applications available, scalable, and resilient is paramount. One of the key services Amazon Web Services (AWS) offers for this is Elastic Load Balancing (ELB). ELB distributes incoming traffic across multiple resources, such as EC2 instances, containers, and IP addresses, improving application performance and availability. Whether you're running a small startup's website or an enterprise-grade application, ELB helps distribute your traffic efficiently, providing high availability and fault tolerance. This blog explores how AWS Elastic Load Balancing works, its different types, and best practices for maximizing performance with it.

What is AWS Elastic Load Balancing (ELB)?

AWS Elastic Load Balancing automatically distributes incoming application traffic across multiple targets, such as Amazon EC2 instances, containers, and IP addresses. ELB helps ensure that no single resource becomes overwhelmed by traffic, improving both the scalability and availability of applications.

AWS ELB offers four types of load balancers. The three most commonly used for application traffic are:

- Application Load Balancer (ALB): Best suited for HTTP/HTTPS traffic, with advanced routing capabilities for microservices and container-based applications.
- Network Load Balancer (NLB): Designed for high-performance applications that require low latency, and capable of handling millions of requests per second.
- Classic Load Balancer (CLB): The original AWS load balancer, designed for simple applications, with limited features compared to ALB and NLB.

The fourth type, the Gateway Load Balancer, is used to deploy and scale third-party virtual network appliances such as firewalls. By choosing among these options, businesses can improve their applications' fault tolerance and user experience, and they can scale with less effort.
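To make "distributes traffic across targets" concrete, here is a toy Python model of round-robin distribution that skips any target marked unhealthy, roughly what happens after a failed health check. Round robin is the default target-selection algorithm for ALB target groups. However, this is a simplified illustration, not how ELB is implemented internally, and the instance IDs are made up.

```python
from dataclasses import dataclass


@dataclass
class Target:
    target_id: str
    healthy: bool = True  # a real load balancer sets this from health checks


class RoundRobinBalancer:
    """Toy model: rotate through targets in order, skipping unhealthy ones."""

    def __init__(self, targets):
        self.targets = list(targets)
        self._next = 0  # index of the next target to try

    def pick(self):
        n = len(self.targets)
        for _ in range(n):  # try each target at most once per request
            target = self.targets[self._next]
            self._next = (self._next + 1) % n
            if target.healthy:
                return target
        raise RuntimeError("no healthy targets available")


if __name__ == "__main__":
    lb = RoundRobinBalancer([Target("i-aaa"), Target("i-bbb"), Target("i-ccc")])
    print([lb.pick().target_id for _ in range(3)])  # each target in turn
    lb.targets[1].healthy = False                    # simulate a failed health check
    print([lb.pick().target_id for _ in range(4)])  # i-bbb is skipped
```

After the simulated failure, the second list is `['i-aaa', 'i-ccc', 'i-aaa', 'i-ccc']`: traffic keeps flowing to the remaining healthy targets, which is the behavior the health-check discussion below relies on.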
Benefits of AWS Elastic Load Balancing

Here's how AWS ELB can help maximize performance for your application:

1. High Availability and Fault Tolerance

One of the primary benefits of ELB is high availability. ELB sends traffic only to healthy targets across multiple Availability Zones, so your application stays available even when individual instances fail or traffic spikes. This minimizes downtime and supports business continuity.

- Cross-zone Load Balancing: Each load balancer node can distribute requests across registered targets in all enabled Availability Zones, not just the targets in its own zone. This keeps load even when zones have different numbers of targets. It is on by default for ALB and off by default for NLB.
- Automatic Health Checks: ELB continuously monitors the health of your targets. If a target becomes unhealthy, traffic is routed to the remaining healthy targets, minimizing disruption.

2. Scalability

AWS ELB integrates with Auto Scaling, enabling applications to scale out or in based on demand. Whether there's a sudden surge in traffic or a drop in user activity, your capacity can adapt without manual intervention.

- Elastic Scaling: When traffic spikes, Auto Scaling launches additional instances and registers them with the load balancer, which starts sending them traffic. As demand decreases, instances are deregistered and terminated, optimizing resource usage.

3. Security

AWS ELB provides several features that help secure your applications and protect sensitive data:

- SSL/TLS Termination: With ALB and NLB, you can terminate SSL/TLS at the load balancer, offloading encryption work from your backend instances.
- Integration with AWS WAF: Application Load Balancers integrate with AWS WAF (Web Application Firewall), which adds protection against common web exploits.
- Security Groups and Network ACLs: You can configure security groups and network access control lists (ACLs) for fine-grained control over inbound and outbound traffic.

4. Improved User Experience

By optimizing traffic distribution, ELB can reduce latency and keep performance consistent for end users. Combined with services like Amazon CloudFront for content delivery, it can give users faster load times regardless of their location.

- Global Load Balancing: With Amazon Route 53 in front of load balancers in multiple regions, you can direct users to the closest available region (for example, with latency-based routing), further improving latency.

How Does AWS Elastic Load Balancing Work?

AWS Elastic Load Balancing distributes incoming traffic to targets such as EC2 instances, containers, or even on-premises servers registered by IP address. Here's a closer look at how ELB works:

- Traffic Distribution: When users make a request to your application, the load balancer evaluates it against the configured listener rules and routes it to the appropriate backend resources.
- Health Monitoring: ELB continuously checks the health of registered targets. If a target is unhealthy, traffic is routed away from it to maintain availability and performance.
- Routing Algorithms: Each load balancer type chooses a target differently:
  - ALB: First matches the request against listener rules (URL path, HTTP method, host header, and so on) to pick a target group. It then selects a target in that group using round robin by default, or least outstanding requests if configured.
  - NLB: Selects a target using a flow hash of the protocol and the source and destination IP addresses and ports (plus, for TCP, the sequence number). As a result, all packets of one connection go to the same target.
  - CLB: Uses round robin for TCP listeners and least outstanding requests for HTTP/HTTPS listeners.

Types of AWS Elastic Load Balancers

As mentioned, AWS offers several types of load balancers, each serving a different use case. The three covered here are:

1. Application Load Balancer (ALB)

The Application Load Balancer is best for routing HTTP/HTTPS traffic and is well suited to applications built on microservices and container-based architectures. Key features of ALB include:

- Content-based routing: ALB can route traffic based on the request URL path, headers, or even the HTTP method.
- Host-based routing: Route traffic based on the domain name or hostname.
- WebSocket support: ALB supports WebSocket connections, making it a good fit for real-time applications.

2. Network Load Balancer (NLB)

The Network Load Balancer is designed to handle large volumes of traffic with very low latency. It operates at the transport layer (Layer 4) and can handle millions of requests per second. Key features include:

- Low latency: Ideal for performance-critical applications where every millisecond counts.
- TCP/UDP support: NLB can handle both TCP and UDP traffic, making it suitable for applications like gaming, VoIP, and real-time communication.
- Static IP support: NLB provides one static IP address per enabled Availability Zone, and you can assign your own Elastic IP addresses instead. This simplifies firewall allowlisting.

3. Classic Load Balancer (CLB)

The Classic Load Balancer is the original AWS ELB option and works at both the transport (Layer 4) and application (Layer 7) layers. AWS now recommends ALB or NLB for new workloads, but CLB can still serve legacy applications that need simpler configurations.

- Basic routing: CLB routes traffic with round robin on TCP listeners and least outstanding requests on HTTP/HTTPS listeners.
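The ALB's content-based and host-based routing works through listener rules. ALB checks these rules in priority order, lowest number first, and falls back to a default action when nothing matches. The Python sketch below models that evaluation, and its target group names and rules are hypothetical. It approximates ALB's matching (case-insensitive host, case-sensitive path, `*` and `?` wildcards) with the standard-library `fnmatch` module. Unlike ALB, `fnmatch` also accepts `[...]` character classes.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import Optional


@dataclass
class Rule:
    priority: int               # lower numbers are evaluated first
    target_group: str           # hypothetical target group name
    host: Optional[str] = None  # host pattern, e.g. "api.example.com"; None matches any host
    path: Optional[str] = None  # path pattern, e.g. "/images/*"; None matches any path


def route(rules, default_target_group, host, path):
    """Return the target group for a request, mimicking ALB rule evaluation."""
    for rule in sorted(rules, key=lambda r: r.priority):
        # Host matching is case-insensitive; path matching is case-sensitive.
        if rule.host is not None and not fnmatchcase(host.lower(), rule.host.lower()):
            continue
        if rule.path is not None and not fnmatchcase(path, rule.path):
            continue
        return rule.target_group  # first matching rule wins
    return default_target_group  # default action


if __name__ == "__main__":
    rules = [
        Rule(10, "api-targets", host="api.example.com"),
        Rule(20, "image-targets", path="/images/*"),
        Rule(5, "admin-targets", host="www.example.com", path="/admin*"),
    ]
    for host, path in [("api.example.com", "/v1/users"),
                       ("www.example.com", "/images/logo.png"),
                       ("www.example.com", "/admin/settings"),
                       ("www.example.com", "/about")]:
        print(host, path, "->", route(rules, "web-targets", host, path))
```

Priority matters whenever patterns overlap: a request that matches several rules goes to the lowest-numbered one. Requests that match no rule, such as `/about` here, fall through to the default target group.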

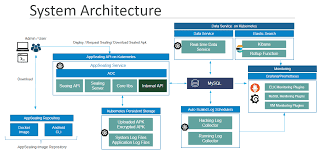

The Role of AWS in Modern DevOps Practices: Enhancing Efficiency and Collaboration

Introduction

In today's fast-paced tech landscape, organizations are constantly looking for ways to accelerate software development, improve quality, and deliver continuously. This is where DevOps comes in. DevOps is a set of practices that bridges the gap between development and operations, focusing on automation, collaboration, and faster delivery cycles.

Cloud computing is one of the major enablers of DevOps, and Amazon Web Services (AWS) has proven to be one of the most capable platforms for modern DevOps practices. Its services automate processes, support collaboration, and let teams focus on delivering value rather than managing infrastructure. In this blog, we'll explore the role of AWS in DevOps, including how it supports automation, scalability, and monitoring. We'll also look at how organizations can use AWS to implement effective DevOps strategies.

What is DevOps?

Before diving into AWS-specific tools, let's clarify what DevOps is and why it matters. At its core, DevOps is about fostering a culture of collaboration between development (Dev) and operations (Ops) teams. The goal is to streamline the pipeline from code creation to production deployment, enabling continuous integration (CI) and continuous delivery (CD).

Key components of DevOps include:

- Automation: Automating repetitive tasks such as testing, deployments, and infrastructure provisioning.
- Collaboration: Improving communication between development and operations teams.
- Monitoring: Tracking application performance in real time to identify issues early.
- Speed: Reducing the time it takes to develop, test, and deploy new features.

Now, let's look at how AWS provides the tools and services that help achieve these goals.
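The "code creation to production deployment" pipeline can be pictured as an ordered list of stages, where a failing stage stops the release before later stages run. The Python sketch below is a toy model of that idea only. Its stage functions are hypothetical stand-ins, and it does not reflect how AWS CodePipeline (covered below) is configured or invoked.

```python
from __future__ import annotations

from typing import Callable


def run_pipeline(stages: list[tuple[str, Callable[[], bool]]]) -> tuple[list[str], str | None]:
    """Run stages in order and stop at the first one that fails.

    Returns the names of the stages that succeeded and the name of the
    failing stage (or None if every stage passed).
    """
    completed = []
    for name, action in stages:
        if not action():
            return completed, name  # later stages never run
        completed.append(name)
    return completed, None


if __name__ == "__main__":
    # Hypothetical stage actions; real ones would fetch code, compile, test, deploy.
    stages = [
        ("source", lambda: True),
        ("build", lambda: True),
        ("test", lambda: False),  # simulate a failing test suite
        ("deploy", lambda: True),
    ]
    done, failed = run_pipeline(stages)
    print("completed:", done, "failed at:", failed)
```

Stopping at the first failure is the point of the design: a broken build or failing test never reaches the deploy stage. That is the guarantee CI/CD tooling automates.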
How AWS Supports DevOps

AWS offers a wide range of cloud services that fit the needs of a modern DevOps pipeline. From automating infrastructure provisioning to facilitating continuous integration, AWS provides the building blocks to implement DevOps practices efficiently.

1. Infrastructure as Code (IaC): Automating Infrastructure with AWS

One of the pillars of DevOps is Infrastructure as Code (IaC). With IaC, teams automate the provisioning and management of infrastructure, keeping environments consistent, repeatable, and scalable.

AWS CloudFormation is a key service for IaC. It lets you define your AWS infrastructure as code in YAML or JSON templates. Because templates are text files, you can keep them in version control, test changes, and deploy resources at scale.

Key benefits of AWS CloudFormation for DevOps:

- Consistent environments: Create identical environments for development, testing, and production.
- Version-controlled infrastructure: Keep a history of changes and roll back to previous configurations.
- Automation: Automate provisioning, scaling, and management of resources, reducing human error.

2. Continuous Integration and Continuous Deployment (CI/CD)

Continuous Integration and Continuous Deployment (CI/CD) are essential practices for quick, reliable software delivery. AWS offers several services for building CI/CD pipelines:

- AWS CodePipeline: Automates the workflow for building, testing, and deploying applications. You define the stages of your release process, such as source, build, test, and deploy, and CodePipeline automates the transitions between them.
- AWS CodeBuild: A fully managed build service that compiles code, runs tests, and produces software packages. CodeBuild integrates with CodePipeline so builds run automatically as part of your release process.
- AWS CodeDeploy: Automates code deployment to environments such as EC2 instances, Lambda functions, Amazon ECS services, and on-premises servers. Its rolling and blue/green deployment options help minimize downtime and manual intervention.

By combining CodePipeline, CodeBuild, and CodeDeploy, AWS provides an end-to-end solution for CI/CD pipelines that help teams deliver software faster and more reliably.

3. Monitoring and Logging: Ensuring Continuous Feedback

Continuous monitoring is a critical component of DevOps, giving teams real-time visibility into the performance of their applications and infrastructure. AWS offers several services for this:

- Amazon CloudWatch: Provides real-time monitoring of AWS resources and applications. You can set alarms on critical metrics such as CPU usage, error rates, or memory utilization; memory metrics require the CloudWatch agent. Alarms can trigger automated responses, such as scaling instances or notifying teams of issues.
- AWS X-Ray: Helps developers analyze and debug distributed applications. X-Ray traces requests across services to reveal performance bottlenecks, errors, and latency in microservices architectures, so DevOps teams can resolve issues faster.
- AWS CloudTrail: Records API calls made in your AWS account, giving visibility into changes to your resources. It records management events by default, and data events can be enabled. This is essential for auditing and security compliance.

A robust monitoring setup with CloudWatch, X-Ray, and CloudTrail gives teams continuous feedback, helps them detect issues early, and improves the reliability of their systems.

4. Containerization and Orchestration: Simplifying Deployment with AWS

As DevOps evolves, containerization has become a key practice for achieving portability and scalability in applications.
AWS provides several services to support container-based deployments:
Amazon Elastic Container Service (ECS): A fully managed container orchestration service for running and scaling Docker containers. ECS integrates with other AWS services such as IAM, Elastic Load Balancing, and CloudWatch, enabling automated and secure deployments.
Amazon Elastic Kubernetes Service (EKS): A managed service for running Kubernetes, the open-source container orchestration platform. EKS manages the Kubernetes control plane for you, making it easier to deploy and scale containerized applications.
AWS Fargate: A serverless compute engine for containers that works with both ECS and EKS. Fargate runs containers without requiring you to manage the underlying EC2 instances, which suits DevOps teams that want to simplify infrastructure management while keeping the ability to scale.

By using these container services, DevOps teams can deploy applications consistently, scale them as needed, and automate much of the deployment pipeline.

Best Practices for Implementing DevOps with AWS

AWS provides the core tools needed for DevOps, but following a few best practices helps keep your processes efficient, scalable, and secure.

1. Adopt a Microservices Architecture

A microservices architecture aligns well with DevOps principles by breaking applications into smaller, more manageable services that can be deployed, scaled, and updated independently. On AWS, you can run microservices on ECS, EKS, or Lambda (for serverless workloads).

2. Automate Everything

DevOps is all about

Using AWS CloudFormation for Infrastructure as Code (IaC)

Introduction

In today’s rapidly evolving cloud landscape, automation is key to scaling and managing infrastructure efficiently. Infrastructure as Code (IaC) has changed how organizations deploy, manage, and scale resources, enabling consistency, speed, and better collaboration. Among the various IaC tools available, AWS CloudFormation stands out as one of the most popular solutions for AWS. It lets you define your AWS resources declaratively, reducing the complexity of managing cloud infrastructure.

But what exactly is CloudFormation, and how can you use it for your infrastructure needs? In this blog, we’ll explore how AWS CloudFormation works, its key features, and practical tips for using it to automate infrastructure deployment on AWS.

What is AWS CloudFormation?

AWS CloudFormation is a service that helps you define and provision AWS infrastructure resources as code, using JSON or YAML templates. These templates describe the AWS resources your application needs, such as EC2 instances, S3 buckets, and RDS databases.

With CloudFormation, you can:
Automate the provisioning and management of AWS resources.
Ensure consistency and repeatability in your infrastructure setup.
Keep your infrastructure templates under version control, just as you do with application code.
Deploy complex environments with a single command.

CloudFormation reduces the manual effort of managing cloud resources, so you can focus on building and running your application rather than configuring infrastructure by hand.

Key Benefits of Using AWS CloudFormation for IaC

1. Simplified Infrastructure Management

CloudFormation simplifies infrastructure management by letting you declare the desired state of your infrastructure.
Instead of logging into the AWS console and configuring resources manually, you define your setup in a template and let CloudFormation handle the rest.

2. Version Control and Collaboration

With CloudFormation, your infrastructure configuration is stored as code. This makes it possible to track changes, revert to previous versions, and collaborate effectively with team members. By using a source control system such as Git, you can manage the lifecycle of your infrastructure the same way you manage application code.

3. Consistency and Reliability

CloudFormation templates let you deploy infrastructure the same way every time, whether the target is a test, staging, or production environment. This reduces the risk of human error that comes with manual configuration.

4. Scalable Infrastructure

CloudFormation integrates with other AWS services, so your templates can grow with your application. Whether you’re provisioning a single EC2 instance or an entire architecture, you can repeat the same deployment reliably, and scaling components such as Auto Scaling groups can themselves be defined in the template.

5. Cost Efficiency

CloudFormation automates the creation and deletion of AWS resources, which helps you provision only the resources you need. When an environment is no longer needed, deleting its stack removes the resources it created, further reducing waste. (Resources with a DeletionPolicy of Retain are kept, and an S3 bucket that still contains objects cannot be deleted until it is emptied.)

How AWS CloudFormation Works

At its core, CloudFormation uses templates that define the AWS resources your application needs. Templates are written in JSON or YAML and can be created manually or generated using the AWS Management Console or AWS CLI. When you create a stack from a template, CloudFormation provisions the resources in the correct order, resolves the dependencies between them, and configures everything as specified in the template.
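CloudFormation works out that order from references between resources: a `Ref` or `Fn::GetAtt` that points at another resource, or an explicit `DependsOn`, implies that the referenced resource must exist first. The sketch below models the idea with Python’s standard `graphlib` module on a template already parsed into a dictionary. It is a simplified illustration, not CloudFormation’s engine: it ignores references inside `Fn::Sub` strings, and the real service also creates independent resources in parallel. The logical IDs and the `AmiId` parameter are hypothetical, and the template is trimmed for illustration (a real deployment would also need a subnet in the VPC), so it is not meant to be deployed as is.

```python
from graphlib import TopologicalSorter


def find_refs(value, found=None):
    """Collect logical IDs referenced through Ref or Fn::GetAtt anywhere in `value`."""
    if found is None:
        found = set()
    if isinstance(value, dict):
        for key, inner in value.items():
            if key == "Ref" and isinstance(inner, str):
                found.add(inner)
            elif key == "Fn::GetAtt":
                # JSON form: ["LogicalId", "Attribute"]; YAML short form: "LogicalId.Attribute"
                target = inner[0] if isinstance(inner, list) else str(inner).split(".", 1)[0]
                found.add(target)
            else:
                find_refs(inner, found)
    elif isinstance(value, list):
        for item in value:
            find_refs(item, found)
    return found


def creation_order(template):
    """Return resource logical IDs in an order where dependencies come first."""
    resources = template["Resources"]
    graph = {}
    for logical_id, body in resources.items():
        deps = find_refs(body.get("Properties", {}))
        depends_on = body.get("DependsOn", [])
        if isinstance(depends_on, str):
            depends_on = [depends_on]
        deps.update(depends_on)
        # Refs to parameters (e.g. AmiId) or pseudo parameters (e.g. AWS::Region) are not resources.
        graph[logical_id] = {dep for dep in deps if dep in resources}
    return list(TopologicalSorter(graph).static_order())


TEMPLATE = {
    "Parameters": {"AmiId": {"Type": "AWS::EC2::Image::Id"}},
    "Resources": {
        "MyInstance": {
            "Type": "AWS::EC2::Instance",
            "Properties": {
                "InstanceType": "t2.micro",
                "ImageId": {"Ref": "AmiId"},
                "SecurityGroupIds": [{"Fn::GetAtt": ["MySecurityGroup", "GroupId"]}],
            },
        },
        "MySecurityGroup": {
            "Type": "AWS::EC2::SecurityGroup",
            "Properties": {"GroupDescription": "Web access", "VpcId": {"Ref": "MyVpc"}},
        },
        "MyVpc": {"Type": "AWS::EC2::VPC", "Properties": {"CidrBlock": "10.0.0.0/16"}},
    },
}

if __name__ == "__main__":
    print(creation_order(TEMPLATE))  # ['MyVpc', 'MySecurityGroup', 'MyInstance']
```

Because the instance references the security group and the security group references the VPC, the only valid order is VPC, then security group, then instance; the same chain runs in reverse when the stack is deleted.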
The collection of resources managed together from a template is called a stack, and you can create, update, and delete stacks as single units.

Basic CloudFormation Workflow:
Create Template: Write a CloudFormation template describing your desired infrastructure.
Launch Stack: Use the AWS Console, AWS CLI, or AWS SDKs to create a stack from the template.
Resource Creation: CloudFormation provisions resources such as EC2 instances, load balancers, and databases.
Monitor and Manage: Use the AWS Console or CLI to track the status and events of your stack, and perform updates as needed.
Stack Deletion: When the infrastructure is no longer needed, delete the stack, which also removes the resources it created (except those configured to be retained).

CloudFormation Template Structure

CloudFormation templates follow a defined structure made up of several sections. The primary components are:
Resources: The main section of the template, and the only required one, where the actual AWS resources (e.g., EC2 instances, VPCs, S3 buckets) are defined.
Parameters: Values that users supply when creating or updating a stack. For instance, you can define parameters for EC2 instance types or Amazon Machine Image (AMI) IDs.
Outputs: Values that CloudFormation returns for the stack, such as the public IP address of an EC2 instance.
Mappings: Static lookup tables used within the template, such as a mapping from region names to specific AMI IDs.
Conditions: Conditions that control whether certain resources are created or certain properties are applied.
Metadata: Additional information about the template or the resources defined in it.

How to Create a CloudFormation Template

Here’s a step-by-step guide to creating a simple CloudFormation template that deploys an EC2 instance.

Step 1: Write the Template

For this example, we’ll use YAML format.
AWSTemplateFormatVersion: '2010-09-09'
Resources:
  MyEC2Instance:
    Type: 'AWS::EC2::Instance'
    Properties:
      InstanceType: t2.micro
      ImageId: ami-0c55b159cbfafe1f0  # Replace with an AMI ID that exists in your region
      KeyName: MyKeyPair  # Must be the name of an existing EC2 key pair in that region

Step 2: Validate the Template

Before launching the template, validate it to catch syntax errors. You can do this in the AWS Console or with the CLI:

aws cloudformation validate-template --template-body file://template.yaml

Validation checks the template’s syntax and structure; it does not confirm that values such as the AMI ID or key pair name actually exist.

Step 3: Launch the Stack

Once your template is validated, you can launch a stack using the AWS Management Console, the CLI, or the AWS SDKs. With the CLI:

aws cloudformation create-stack --stack-name MyStack --template-body file://template.yaml

Step 4: Monitor and Manage the Stack

After launching the stack, you can monitor its progress in the AWS Console or with the CLI:

aws cloudformation describe-stacks --stack-name MyStack

Advanced CloudFormation Features

1. Change Sets

Change Sets let you preview changes before applying them to a stack, which helps you understand how modifications will affect your infrastructure.

2. StackSets

StackSets allow you to manage CloudFormation stacks across multiple

Comparing AWS S3 and EBS: Which Storage Solution is Right for You?

Introduction

When architecting applications on AWS, selecting the right storage solution is crucial for optimizing performance, cost, and scalability. AWS offers multiple storage options, and Amazon Simple Storage Service (S3) and Amazon Elastic Block Store (EBS) are two of the most widely used. Each caters to different storage needs and comes with its own features, benefits, and use cases.

In this blog, we’ll dive into the differences between S3 and EBS to help you decide which option suits your requirements. Whether you’re building scalable web applications, managing backups, or running databases, this comparison should make the choice easier.

What is AWS S3?

Amazon S3 is an object storage service for storing and retrieving any amount of data over the internet. It offers virtually unlimited storage capacity and is known for its durability, availability, and scalability.

Key Features of AWS S3:
Object Storage: S3 stores data as objects, each consisting of the data itself, metadata, and a unique key within a bucket.
Scalability: S3 scales automatically, so you can store virtually unlimited data without provisioning capacity.
Durability and Availability: S3 Standard is designed for 99.999999999% (eleven nines) durability and 99.99% availability of objects over a given year.
Security: S3 offers fine-grained access control, encryption, and integration with AWS Identity and Access Management (IAM).
High Throughput: S3 supports high aggregate throughput for parallel data transfer, making it well suited to data lakes, backups, and static website hosting.

Use Cases for S3:
Data Lakes and Big Data Analytics: Store massive datasets for analytics or machine learning.
Backup and Archiving: Cost-effective backups, disaster recovery, and long-term archiving.
Static Website Hosting: Host static files such as images, videos, HTML, CSS, and JavaScript.
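To put “eleven nines” in perspective, a durability of 99.999999999% corresponds to an average annual loss rate of 10^-11 per object. The short calculation below turns that into an expected number of lost objects per year. The function name is illustrative, and the result is a statistical average from a design target, not a guarantee for any particular object.

```python
def expected_objects_lost_per_year(object_count: int, nines: int = 11) -> float:
    """Average number of objects lost per year at a designed durability of `nines` nines.

    Eleven nines (99.999999999%) corresponds to an average annual loss rate of
    1e-11 per object. This is a statistical design target, not a guarantee.
    """
    annual_loss_rate = 10.0 ** -nines
    return object_count * annual_loss_rate


if __name__ == "__main__":
    lost = expected_objects_lost_per_year(10_000_000)
    print(f"Expected objects lost per year: {lost:.0e}")
    print(f"Average years between single-object losses: {round(1 / lost):,}")
```

For 10 million objects, this works out to about 1e-04 expected losses per year, or one lost object roughly every 10,000 years on average.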
What is AWS EBS?

Amazon EBS is a block-level storage service designed for use with Amazon EC2 instances. EBS suits applications that need persistent, low-latency block storage, such as databases and enterprise applications.

Key Features of AWS EBS:
Block Storage: EBS provides block-level storage volumes that attach to EC2 instances and behave like raw, unformatted disks.
Persistent Storage: EBS volumes exist independently of an instance’s lifetime, so data can persist after the instance is stopped or terminated. By default, a root volume is deleted when its instance terminates unless its DeleteOnTermination setting is turned off, while other attached volumes are kept.
Performance: EBS offers SSD-backed volumes for transactional, IOPS-intensive workloads and HDD-backed volumes for throughput-oriented workloads.
Snapshots: You can create point-in-time snapshots of EBS volumes, which are stored in S3, for backup or disaster recovery.

Use Cases for EBS:
Databases: EBS is well suited to databases that need low-latency, high-performance storage.
Enterprise Applications: Applications such as SAP, Microsoft SQL Server, and other transactional systems.
File Systems: Applications that need a traditional file system with consistent, low-latency access to data.

Key Differences Between AWS S3 and EBS

Now that we’ve introduced both services, let’s look at the key differences that will help you choose between them.

1. Type of Storage
S3: Object storage, suited to unstructured data such as images, videos, backups, and logs.
EBS: Block storage, used by EC2 instances for data that needs a file system, such as databases and applications.
When to Use: Use S3 if you need scalable, durable storage for large amounts of data that doesn’t require a file system. Use EBS for data that needs low-latency, high-performance block-level access, such as databases and file systems.

2. Scalability
S3: Highly scalable with virtually unlimited storage. You can store any amount of data and access it from anywhere in the world.
EBS: Each volume has a fixed, provisioned size that you can increase (up to 16 TiB for most volume types, and up to 64 TiB for io2 Block Express). A volume attaches only to EC2 instances in its own Availability Zone, usually to a single instance, so EBS scales far less freely than S3.
When to Use: S3 is the better choice when you need to store large, growing amounts of unstructured data without managing capacity. EBS suits high-performance workloads whose capacity requirements are predictable and well defined.

3. Performance and Latency
S3: Offers excellent aggregate throughput for data-intensive applications, but per-request latency is higher than with block storage.
EBS: Provides consistent low-latency storage, making it ideal for applications that need fast access to data, such as databases.
When to Use: Choose EBS if you need consistent low-latency storage for real-time applications or databases. S3 is better suited to high-throughput workloads where higher per-request latency is acceptable.

4. Data Access and Integration
S3: Accessed over HTTP/HTTPS through the AWS Management Console, APIs, CLI, and SDKs. It is well suited to sharing or distributing large files widely.
EBS: Accessed directly by the EC2 instance it is attached to. It can be formatted with a file system (such as ext4, NTFS, or XFS) and used as a regular disk drive.
When to Use: Use S3 when you need a simple, globally accessible data store, such as for serving static content or storing backup files. EBS is the right fit when your application needs direct block-level access, such as for a file system or a database.

5. Durability and Availability
S3: S3 Standard is designed for 99.999999999% durability and 99.99% availability, and stores objects redundantly across multiple Availability Zones in a Region.
EBS: Replicates each volume within a single Availability Zone to protect against component failure. Its designed durability is lower than S3’s (99.8% to 99.9% for most volume types and 99.999% for io2), which is why snapshots are recommended for backups.
When to Use: If durability and high availability are paramount for your use case, S3 is the better choice.
EBS offers good durability, but it is not designed for long-term retention or for making data available beyond a single Availability Zone the way S3 is.

6. Pricing Model
S3: Pricing is based on the amount of data stored, the number of requests, and data transfer out. It is cost-effective for large datasets, especially when infrequently accessed data is kept in lower-cost storage classes.
EBS: Pricing is based on the provisioned volume size and volume type (SSD vs. HDD), plus provisioned IOPS or throughput for some volume types and any snapshot storage. You pay for the capacity you provision whether or not you fill it. EBS can cost more for high-performance workloads, but it delivers the performance those applications need.
When to Use: If you have large, infrequently accessed datasets or need to store backups and archives, S3 is the more cost-effective option.
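To make the structural difference between the two pricing models concrete, the sketch below compares a month of storing 200 GB of data in S3 with keeping the same data on a 500 GB EBS volume provisioned with headroom. All rates are placeholders chosen for round arithmetic, not current AWS prices, and the model leaves out data transfer, snapshots, and extra provisioned IOPS or throughput. The class and function names are hypothetical. The point is the shape of the bill: S3 charges for what you store and request, while EBS charges for the capacity you provision.

```python
from dataclasses import dataclass


@dataclass
class S3Usage:
    stored_gb: float       # average GB stored over the month
    put_requests: int
    get_requests: int


@dataclass
class EbsVolume:
    provisioned_gb: float  # EBS bills the provisioned size, however much of it holds data


def s3_monthly_cost(usage: S3Usage, price_per_gb: float,
                    price_per_1k_puts: float, price_per_1k_gets: float) -> float:
    """Storage plus request charges (data transfer ignored)."""
    storage = usage.stored_gb * price_per_gb
    requests = (usage.put_requests / 1000) * price_per_1k_puts \
        + (usage.get_requests / 1000) * price_per_1k_gets
    return storage + requests


def ebs_monthly_cost(volume: EbsVolume, price_per_gb: float) -> float:
    """Capacity charge only (extra provisioned IOPS/throughput and snapshots ignored)."""
    return volume.provisioned_gb * price_per_gb


if __name__ == "__main__":
    # Placeholder rates for illustration only; look up current prices for your Region.
    s3 = s3_monthly_cost(S3Usage(stored_gb=200, put_requests=10_000, get_requests=100_000),
                         price_per_gb=0.02, price_per_1k_puts=0.005, price_per_1k_gets=0.0004)
    ebs = ebs_monthly_cost(EbsVolume(provisioned_gb=500), price_per_gb=0.08)
    print(f"S3: ${s3:.2f}/month, EBS: ${ebs:.2f}/month")
```

With these placeholder rates it prints `S3: $4.09/month, EBS: $40.00/month`. The comparison is not like for like, since EBS also buys low-latency block access, but it shows why unused provisioned capacity matters for EBS and request volume matters for S3.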

Exploring the Benefits of Serverless Computing with AWS Lambda

Introduction

In today’s fast-paced digital world, developers and organizations are increasingly looking for ways to build applications more efficiently and scale them with less effort. Traditional cloud architectures, while powerful, often require significant infrastructure management, which adds complexity and cost. Serverless computing addresses this with a different approach to application development.

One of the most popular serverless services is AWS Lambda. Lambda lets you run code in response to events without provisioning or managing servers. You focus on writing your application code, while AWS handles operational work such as scaling, patching, and capacity management.

In this blog, we’ll explore the core benefits of AWS Lambda, how it simplifies application development, and why it has become so popular with developers.

What Is Serverless Computing?

Before diving into AWS Lambda, it helps to understand serverless computing. Serverless computing is a cloud-native development model in which developers write code without managing the underlying infrastructure. The cloud provider (such as AWS) automatically provisions, scales, and manages the servers required to run the code. The name “serverless” can be misleading: servers are still involved, but their provisioning and management are abstracted away from the user.

Key Characteristics of Serverless Computing:
Event-driven: Serverless applications typically respond to triggers such as HTTP requests, file uploads, or database changes.
Pay-per-use: Users are charged for the resources their functions actually consume rather than for capacity allocated upfront.
Auto-scaling: Serverless platforms scale your application with demand without manual intervention.

What Is AWS Lambda?

AWS Lambda is a compute service that lets you run code without provisioning or managing servers. You upload your code as a “Lambda function,” configure the events that trigger it (such as an HTTP request through API Gateway or a file upload to S3), and Lambda runs your code in response to those events, scaling as needed.

Lambda natively supports several programming languages, including Node.js, Python, Java, C#, and Ruby, and can run other languages through custom runtimes. It integrates with many other AWS services, such as Amazon S3, DynamoDB, and API Gateway.

Key Benefits of AWS Lambda

Now, let’s look at the benefits of using AWS Lambda for your applications.

1. No Server Management

One of the most significant advantages of AWS Lambda is that you don’t manage any servers. Traditionally, running servers involves configuring infrastructure, handling scaling, patching, and monitoring. With Lambda, AWS takes care of this, and you focus on writing and deploying your code.

Key Benefits:
Less overhead: No server configurations or operating system patching to manage.
Faster development cycles: Spend more time building features and less time managing infrastructure.
Automatic scaling: Lambda scales automatically with the number of incoming requests, up to your account’s concurrency quota, so you don’t have to adjust capacity as your application grows.

2. Cost-Effective

AWS Lambda follows a pay-as-you-go pricing model. You pay per request and for compute time, measured in GB-seconds: the memory allocated to the function multiplied by its execution duration, which is rounded up to the nearest millisecond. If your function doesn’t run, you aren’t charged for compute. For spiky or low-volume workloads, this can cost much less than keeping servers running and paying for idle capacity.
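The pricing model above can be turned into a rough monthly estimate: request charges plus compute charges, where compute is counted in GB-seconds. The default rates in the sketch ($0.20 per million requests and $0.0000166667 per GB-second) are example values for x86 functions; rates differ by Region and architecture, so check current pricing before relying on the numbers. The function name is hypothetical, the free tier is ignored, and the 1 ms rounding is applied to the average duration rather than to each invocation, so the result is an approximation.

```python
import math


def lambda_monthly_cost(invocations: int, avg_duration_ms: float, memory_mb: int,
                        price_per_million_requests: float = 0.20,
                        price_per_gb_second: float = 0.0000166667) -> float:
    """Rough monthly Lambda bill: request charges plus GB-second compute charges.

    Free tier ignored; rounding to 1 ms is applied to the average duration,
    which approximates per-invocation rounding.
    """
    billed_seconds = math.ceil(avg_duration_ms) / 1000  # duration rounded up to the nearest ms
    memory_gb = memory_mb / 1024
    gb_seconds = invocations * billed_seconds * memory_gb
    request_cost = invocations / 1_000_000 * price_per_million_requests
    compute_cost = gb_seconds * price_per_gb_second
    return request_cost + compute_cost


if __name__ == "__main__":
    cost = lambda_monthly_cost(invocations=3_000_000, avg_duration_ms=120, memory_mb=512)
    print(f"Estimated monthly cost: ${cost:.2f}")
```

For 3 million invocations averaging 120 ms at 512 MB, the estimate is about $3.60 for the month: 180,000 GB-seconds of compute (about $3.00) plus 3 million requests ($0.60). Because cost scales with memory × duration, trimming execution time or memory has a direct, proportional effect on the compute charge.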
How It Saves Money:
No idle resources: Unlike traditional deployments where you pay for pre-allocated instances, Lambda charges only while your code is running.
Cost scalability: Lambda scales with demand. When demand spikes, Lambda handles the load without manual intervention, and you don’t pay for over-provisioned infrastructure when demand drops.

3. Built-In High Availability

High availability is critical for modern applications. Lambda runs your functions across multiple Availability Zones in a Region, so the failure of a single data center does not take them offline. For asynchronous invocations, Lambda also retries failed executions automatically (twice by default) and can send events that still fail to a dead-letter queue or an on-failure destination. As a result, you don’t have to design and manage your own failover infrastructure for the compute layer.

4. Automatic Scaling

Lambda adjusts to the workload by scaling your function up or down as needed. Whether you’re serving a few requests per minute or thousands per second, Lambda provisions the required compute capacity within your account’s concurrency quotas, which you can ask AWS to raise. You don’t need to set up additional infrastructure or scale manually.

Key Benefits:
Zero configuration scaling: No need to configure or provision resources in advance to handle traffic spikes.
Seamless growth: Lambda handles increasing traffic without manual intervention or over-provisioning.

5. Faster Time to Market

AWS Lambda simplifies the deployment and maintenance of serverless applications. Developers can quickly write, test, and deploy code that responds to specific events, and this rapid iteration cycle allows faster prototyping and faster delivery of new features or services.

Key Benefits:
Quick code deployment: No complex server setup or resource allocation is required.
Shorter development cycles: Because AWS handles the infrastructure, developers can focus on writing features and improving the product rather than configuring and maintaining servers.

6. Integrated with Other AWS Services

AWS Lambda integrates with a wide range of AWS services, so you can build event-driven architectures with minimal glue code. Lambda functions can be triggered by events from services such as Amazon S3, DynamoDB, API Gateway, and SNS.

For example, you can use Lambda to:
Automatically resize images when they’re uploaded to S3.
Process records as they arrive in a Kinesis data stream.
Run business logic when a new item is added to a DynamoDB table (through DynamoDB Streams).

These integrations let you build serverless applications with powerful, event-driven workflows.

7. Improved Developer Productivity

With AWS Lambda, developers write only the business logic needed to respond to specific events. The heavy lifting, such as scaling and provisioning infrastructure, is abstracted away, so developers can focus on clean, concise code that delivers value to users. Lambda also works well with development frameworks such as the Serverless Framework and AWS SAM, further streamlining

Leveraging AWS for Big Data Analytics: Tools and Techniques

Introduction

As businesses increasingly rely on big data to drive decisions, the need for efficient, scalable, and cost-effective analytics has never been greater. Amazon Web Services (AWS) offers a wide array of tools designed to help organizations process, analyze, and extract insights from large volumes of data. Whether you’re working with structured, unstructured, or streaming data, AWS provides a flexible suite of services that can handle the demands of modern data analytics.

In this blog, we’ll explore how AWS tools can power big data analytics, from storage and processing to analysis and visualization. You’ll learn about the key AWS services for big data workflows and how to use them to get more out of your organization’s data.

What Is Big Data Analytics?

Big data analytics is the process of examining large and varied datasets, often from multiple sources, to uncover hidden patterns, correlations, market trends, and other useful insights. These insights help organizations make informed decisions, predict outcomes, and even automate processes. Handling big data, however, requires specialized tools and infrastructure, which is where AWS is strong.

Key AWS Tools for Big Data Analytics

AWS provides an extensive toolkit that covers the entire analytics pipeline, from data storage and processing to querying and visualization. Let’s look at some of the most widely used AWS tools for big data analytics.

1. Amazon Redshift: Data Warehousing at Scale

Amazon Redshift is AWS’s fully managed data warehouse, optimized for running complex queries on large datasets. It is designed for analytics workloads that need high performance and scalability, giving businesses a way to store and analyze large amounts of structured data.
Key Benefits:
Scalability: Redshift scales to handle petabytes of data.
Performance: Features such as columnar storage and massively parallel query execution let Redshift run complex queries quickly.
Integration: Redshift integrates with other AWS services, such as Amazon S3 for storage and AWS Glue for data preparation.
When to Use: Redshift is ideal for businesses that need to store large amounts of structured data and run complex analytics or reporting.

2. Amazon EMR: Managed Hadoop and Spark

Amazon EMR (Elastic MapReduce) is a managed cluster platform for processing large amounts of data quickly and cost-effectively with big data frameworks such as Apache Hadoop, Apache Spark, and Apache Hive. It simplifies the setup of big data clusters and reduces manual configuration.

Key Benefits:
Scalability: EMR clusters can be scaled up or down based on the workload.
Cost-Effective: You pay only for the compute and storage resources you use, and you can shut clusters down when jobs finish.
Integration with AWS: EMR integrates with other AWS services, such as Amazon S3 for storage and AWS Lambda for serverless computing.
When to Use: EMR is ideal for large-scale data processing tasks such as data transformation, machine learning, or log analysis.

3. Amazon Athena: Serverless Querying of S3 Data

Amazon Athena is a serverless interactive query service for analyzing data directly in Amazon S3 with standard SQL. Athena scales automatically to run queries on large datasets without any infrastructure to manage.

Key Benefits:
Serverless: There are no servers to provision or manage.
Cost-Efficient: You pay only for the queries you run, based on the amount of data each query scans.
Fast: Athena performs best on data stored in columnar formats such as Parquet or ORC and partitioned so that queries read only the data they need.
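Because Athena bills by data scanned, the format and layout of your data in S3 directly affect cost. The estimator below uses the widely cited rate of $5 per TB scanned, rounded up to the nearest megabyte with a 10 MB minimum per query. Treat the rate and the decimal-unit conversion as assumptions and check current pricing for your Region. The 25 GB figure for a columnar copy of a 500 GB CSV dataset is hypothetical: how much less a query reads from Parquet or ORC depends on which columns it touches and on compression.

```python
import math

MIN_BILLED_MB = 10  # per-query minimum


def athena_query_cost(bytes_scanned: int, price_per_tb: float = 5.0) -> float:
    """Estimate the data-scanned charge for one Athena query.

    Assumes decimal units (1 MB = 10**6 bytes, 1 TB = 10**6 MB); check the
    Athena pricing page for the exact definitions and your Region's rate.
    """
    scanned_mb = math.ceil(bytes_scanned / 10**6)  # rounded up to the nearest MB
    billed_mb = max(scanned_mb, MIN_BILLED_MB)
    return billed_mb / 10**6 * price_per_tb


if __name__ == "__main__":
    GB = 10**9
    print(f"Full scan of 500 GB of CSV: ${athena_query_cost(500 * GB):.2f}")
    print(f"Same query reading 25 GB of Parquet columns: ${athena_query_cost(25 * GB):.3f}")
    print(f"Tiny lookup query: ${athena_query_cost(2_048):.5f}")
```

Under these assumptions the full CSV scan costs $2.50 per query, the columnar version $0.125, and even a tiny query is billed for the 10 MB minimum ($0.00005). For dashboards that run the same query many times a day, that difference compounds quickly.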
When to Use: Athena is great for running ad-hoc queries on large datasets stored in S3 without managing infrastructure.

4. Amazon Kinesis: Real-Time Data Processing

Amazon Kinesis is a family of services for collecting, processing, and analyzing streaming data in real time. Kinesis can ingest data from many sources, including social media feeds, IoT devices, and website clickstreams, and feed real-time analytics.

Key Benefits:
Real-Time: Kinesis processes data within seconds of arrival, making it well suited to real-time analytics and monitoring.
Scalable: Kinesis Data Streams can scale automatically in on-demand capacity mode, or you can control capacity yourself by choosing the number of shards in provisioned mode.
Integration: Kinesis integrates with other AWS services. For example, AWS Lambda can consume records from a stream, and Amazon Data Firehose (formerly Kinesis Data Firehose) can deliver streaming data to Amazon Redshift or to Amazon S3, where Athena can query it.
When to Use: Kinesis is a strong fit for processing real-time streaming data such as clickstreams, social media feeds, or sensor data, and Kinesis Video Streams handles live video.

Techniques for Leveraging AWS for Big Data Analytics

Now that we’ve covered the core AWS services, let’s look at techniques for using these tools effectively in your big data workflows.

1. Data Storage Best Practices with Amazon S3

Amazon S3 is the backbone of many big data solutions, offering highly durable and scalable storage for data of any size. To use S3 efficiently in big data workflows, follow these practices:
Organize Data: S3 has a flat namespace, but consistent key prefixes (for example, partitioning keys by date) act like folders, make large datasets easier to manage, and let query engines skip data they don’t need.
Versioning: Enable versioning to protect against accidental deletion or overwrites and to keep a history of object changes.
Lifecycle Policies: Use S3 lifecycle policies to move infrequently accessed data to cheaper storage classes, such as S3 Glacier, to optimize costs.

2. Data Transformation with AWS Glue

AWS Glue is a fully managed ETL (Extract, Transform, Load) service that automates much of the data transformation process.
When working with raw, unstructured, or semi-structured data, Glue can clean, enrich, and prepare it for further analysis.

Techniques:
Schema Discovery: Glue crawlers can infer the schema of your data automatically, making it easier to integrate diverse data sources.
Job Scheduling: Use Glue triggers to run ETL jobs on a schedule or in response to events, reducing manual intervention and improving consistency.
Data Catalog: The AWS Glue Data Catalog serves as a central metadata repository that services such as Athena, EMR, and Redshift Spectrum can use to find and query your data.

3. Data Analytics at Scale with Redshift Spectrum

Redshift Spectrum lets you query data directly in Amazon S3 from Redshift without loading it into the warehouse. This lets you run analytics on very large datasets stored in S3 with the power of Redshift’s query engine.
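How data is laid out in S3 decides how much of it Redshift Spectrum or Athena has to read. When an external table is partitioned, both engines can skip partitions that a query’s WHERE clause rules out instead of scanning every object. A common convention is Hive-style `name=value` key segments, which Glue crawlers and Athena recognize as partitions. The sketch below only builds such keys and imitates partition pruning locally in Python; the `clickstream` prefix, file name, and helper functions are hypothetical, and it does not call any AWS service.

```python
from datetime import date, timedelta


def partitioned_key(prefix: str, day: date, filename: str) -> str:
    """Build an S3 object key with Hive-style partition segments (year=/month=/day=)."""
    return f"{prefix}/year={day.year:04d}/month={day.month:02d}/day={day.day:02d}/{filename}"


def partition_date(key: str) -> date:
    """Read the partition date back out of a Hive-style key (filenames must not contain '=')."""
    parts = dict(segment.split("=", 1) for segment in key.split("/") if "=" in segment)
    return date(int(parts["year"]), int(parts["month"]), int(parts["day"]))


def keys_for_range(keys: list, start: date, end: date) -> list:
    """Keep only keys whose partition falls in [start, end], the way partition pruning skips data."""
    return [key for key in keys if start <= partition_date(key) <= end]


if __name__ == "__main__":
    keys = [
        partitioned_key("clickstream", date(2024, 1, 1) + timedelta(days=i), "part-0000.parquet")
        for i in range(10)
    ]
    for key in keys_for_range(keys, date(2024, 1, 3), date(2024, 1, 5)):
        print(key)
```

Here a three-day query touches 3 of the 10 daily partitions, starting with `clickstream/year=2024/month=01/day=03/part-0000.parquet`; in a real table the same idea means reading (and, for Athena, paying for) roughly 30% of the data.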

How to Architect a Scalable Application on AWS

Introduction

Building a scalable application is one of the main reasons companies migrate to the cloud. Amazon Web Services (AWS) provides a broad set of tools that help applications scale efficiently and cost-effectively. Whether you’re building a simple web app or a complex enterprise system, AWS offers services that support your application’s growth while keeping performance high and costs under control.

In this guide, we’ll walk through the key components and best practices for architecting a scalable application on AWS. From designing for scalability to choosing the right services for load balancing, storage, and automation, you’ll gain practical insights for building your own cloud-based solution.

What Is a Scalable Application?

Before diving into AWS-specific solutions, let’s define scalability in the context of application architecture. A scalable application can handle increasing load by adding resources without compromising performance. There are two primary types of scalability:
Vertical Scalability: Increasing the power (CPU, RAM, etc.) of a single server, often called “scaling up.”
Horizontal Scalability: Adding more servers to distribute the load, often called “scaling out.”

AWS services support both vertical and horizontal scaling, with a strong emphasis on horizontal scaling, which is also key to building highly available applications.

Step 1: Design for Elasticity

One of the main benefits of AWS is the ability to scale automatically based on demand. To design a scalable application, focus on services and features that offer elasticity.

Key AWS Services for Elasticity
EC2 Auto Scaling: Automatically adds or removes EC2 instances based on metrics such as CPU utilization or request count. This ensures that your application uses only the capacity it needs, which also helps reduce costs.
Elastic Load Balancing (ELB): Distributes incoming traffic across multiple targets, such as EC2 instances, and routes requests only to healthy ones. This keeps any single server from being overwhelmed and helps prevent downtime during traffic spikes.
Amazon S3 (Simple Storage Service): Highly scalable storage for static files (images, videos, backups). You don’t need to add capacity manually, because S3 grows with your data.

Step 2: Implement Stateless Architecture

Stateless applications are easier to scale. In a stateless architecture, each request is handled independently, with no reliance on data kept on a particular server from earlier requests. This makes it easy to spread the load evenly across servers.

How to Achieve Statelessness on AWS
Decouple Your Application: Use services such as Amazon SQS (Simple Queue Service), Amazon SNS (Simple Notification Service), and AWS Lambda to handle work asynchronously and reduce tight dependencies between components.
Use Amazon RDS and DynamoDB: Keep persistent state in fully managed databases that can scale with demand. Amazon RDS provides relational engines such as MySQL and PostgreSQL, while DynamoDB is a NoSQL database that can scale automatically with request volume (for example, in on-demand capacity mode).
Store Session Data Externally: Use Amazon ElastiCache or DynamoDB for session data instead of local memory or disk on your servers. This preserves session state regardless of which instance handles the request.

Step 3: Use Distributed Systems and Microservices

To get the most out of AWS, consider adopting a microservices architecture. Microservices are small, independent services that communicate over a network, allowing teams to develop, deploy, and scale parts of the application independently.

Key AWS Tools for Microservices
Amazon ECS (Elastic Container Service) or EKS (Elastic Kubernetes Service): These services run containerized applications and help you manage microservices efficiently, scaling them up or down with demand.
AWS Lambda: For small, event-driven workloads, Lambda runs your code without you provisioning or managing servers. It scales automatically with the number of incoming events, up to your account’s concurrency limit.
Amazon API Gateway: API Gateway acts as a single entry point that routes client requests to the right microservice. It can also throttle API requests, protecting your backend services from being overwhelmed during traffic spikes.
Step 4: Set Up Auto Scaling and Load Balancing
Auto scaling and load balancing are critical for handling large amounts of traffic.
How to Set Up Auto Scaling on AWS
Create Auto Scaling Groups: An Auto Scaling group lets you define the minimum and maximum number of EC2 instances you want running. It automatically adds or removes instances within those bounds based on metrics you choose, such as CPU utilization or network traffic.
Use Elastic Load Balancing: Attach a load balancer to the Auto Scaling group so that incoming traffic is spread across the group’s healthy instances, including new ones as they launch. This keeps the application available as instances come and go.
Best Practices for Load Balancing
Use multiple Availability Zones (AZs) for high availability by deploying EC2 instances across at least two AZs.
Monitor your traffic patterns and adjust scaling policies accordingly. For example, you may need to scale out faster during peak hours.
Use sticky sessions only when necessary. They route a user’s requests to the same instance, but they work against a stateless design and can spread load unevenly.
Step 5: Implement Fault Tolerance and Disaster Recovery
A scalable application must also be fault-tolerant and able to recover from failures. AWS provides several services for both.
Key AWS Services for Fault Tolerance
Amazon RDS Multi-AZ: RDS maintains a synchronously replicated standby copy of your database in another AZ. If the primary fails, RDS automatically fails over to the standby with minimal downtime.
Amazon S3 and S3 Glacier for Backup: Store backups in S3 for immediate access, or in the Amazon S3 Glacier storage classes for low-cost, long-term archiving. Both are designed for 99.999999999% (eleven nines) durability.
Amazon Route 53 for DNS Failover: With health checks and failover routing, Route 53 can route traffic away from an unhealthy endpoint. If one server or Region becomes unavailable, Route 53 can automatically redirect traffic to a healthy instance or another Region.
Step 6: Optimize for Cost Efficiency
Scalability isn’t just about handling traffic; it’s also about doing so cost-effectively. AWS provides several tools to help optimize costs while maintaining scalability.
Cost-Optimization Tips
Choose the Right EC2 Instance Type: AWS offers general-purpose instance types as well as families optimized for compute, memory, or storage. Choose the one that matches your application’s resource profile to avoid overprovisioning.
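To make the right-sizing tip concrete, here is a minimal Python sketch that picks the smallest instance type covering a workload’s observed peak usage. It assumes you already know peak vCPU and memory usage from your monitoring data. The helper name right_size and the 20% headroom default are illustrative choices, not AWS recommendations. The catalog lists a few real c5, m5, and r5 sizes with their published vCPU and memory figures; it deliberately omits pricing, storage-optimized families, and the other factors you would weigh in practice.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class InstanceType:
    name: str
    family: str        # general purpose, compute optimized, memory optimized
    vcpus: int
    memory_gib: float


# A small subset of current-generation sizes with their published vCPU/memory.
# Prices and storage-optimized families are intentionally left out of this sketch.
CATALOG = (
    InstanceType("c5.large", "compute optimized", 2, 4),
    InstanceType("m5.large", "general purpose", 2, 8),
    InstanceType("r5.large", "memory optimized", 2, 16),
    InstanceType("c5.xlarge", "compute optimized", 4, 8),
    InstanceType("m5.xlarge", "general purpose", 4, 16),
    InstanceType("r5.xlarge", "memory optimized", 4, 32),
)


def right_size(
    peak_vcpus: float,
    peak_memory_gib: float,
    headroom: float = 1.2,  # hypothetical safety margin over observed peak
    catalog: Sequence[InstanceType] = CATALOG,
) -> Optional[InstanceType]:
    """Return the smallest instance covering peak usage plus headroom.

    'Smallest' means fewest vCPUs first, then least memory, so the pick
    leaves as little idle (paid-for but unused) capacity as possible.
    """
    need_cpu = peak_vcpus * headroom
    need_mem = peak_memory_gib * headroom
    fits = [t for t in catalog if t.vcpus >= need_cpu and t.memory_gib >= need_mem]
    if not fits:
        # Nothing in this catalog is large enough: scale out or consider bigger sizes.
        return None
    return min(fits, key=lambda t: (t.vcpus, t.memory_gib))


if __name__ == "__main__":
    choice = right_size(1.5, 10)
    print(choice.name if choice else "no fit")
```

For a workload peaking at 1.5 vCPUs and 10 GiB, right_size(1.5, 10) returns r5.large: the memory requirement with headroom (12 GiB) rules out c5.large and m5.large, and r5.large has fewer vCPUs than any of the xlarge options that also fit. A real selection would also compare On-Demand prices, which this sketch leaves out.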